Most “AI risk” conversations in large organisations start in the wrong place.

They start with: “Can we trust AI?”

That question sounds sensible, but it hides a contradiction that every leader lives with daily: we already trust an army of uncontrolled entities – humans – to make changes to production systems. We are inconsistent. We misunderstand requirements. We have good days and bad days. We leave. We improvise. We ship bugs.

And yet the world keeps turning.

So the real question is not whether AI is trustworthy in some abstract sense. The real question is this:

What makes humans acceptable risk in software delivery, and can we wrap AI in the same kind of controls?

Once you look at it that way, the path forward becomes less philosophical and more operational.

Organisations don’t trust people, they trust the system

In any organisation that ships reliably, we don’t “trust” engineers to always be correct. We assume the opposite: people will make mistakes, especially under time pressure, ambiguity, and complexity.

What we actually trust are the mechanisms that bound human error:

- Peer review and change approval

- Automated tests and quality gates

- Static analysis, scanning, and policy checks

- Staged rollouts, canaries, and monitoring

- Incident response and postmortems

- Access controls and segregation of duties

- Audit trails and accountability

In other words, we trust the factory.

This matters because it reframes AI adoption from a moral debate into a delivery design problem. If your factory is weak, AI will amplify chaos. If your factory is strong, AI becomes another worker that can be constrained, audited, and measured.

Why AI feels scarier than humans

If humans are less predictable, why do risk-averse organisations tolerate them more than AI?

Because humans are easier to understand, challenge, and hold accountable than AI usually is.

Humans share context you never fully wrote down:

- Why this requirement matters

- What “good enough” means for this team

- What will upset customers, regulators, or internal stakeholders

- When to stop polishing and ship

- When something “feels wrong” even if it passes the formal spec

Humans also carry organisational accountability. When a change causes harm, you can ask: who approved this, what was the rationale, what changed, what did we learn?

AI flips those properties:

- It can look confident while being wrong.

- It can be correct for reasons you can’t explain.

- It doesn’t naturally know your priorities unless you encode them.

- Accountability gets fuzzy fast: who is responsible, the dev, the tool owner, or the vendor?

That’s not a reason to avoid AI. It’s a reason to adopt it through controls that restore clarity and accountability.

The control problem is not new. Agile exists because we rarely know what we want

There’s a deeper issue that sits underneath the fear: steering.

Organisations often assume that software development is “translate requirements into code.” In reality, it’s discovery. Requirements are incomplete. Constraints emerge late. Tradeoffs become visible only once you build something. This is why Agile methods exist: to make learning cheap and constant.

Humans are good at discovery because they push back, ask questions, and clarify intent.

AI, by default, does something else. It generates plausible progress. It will fill in gaps. It will guess. It will happily move forward even when the problem statement is mushy.

So the practical risk is not “AI writes bad code.” The practical risk is:

AI accelerates you toward a misunderstood problem.

The fix is not to demand perfect AI. The fix is to treat intent as a first-class engineering artefact.

The pivot: stop trusting the actor, start verifying the outcome

If you want a serious, non-hyped approach to AI in software delivery, it’s this:

Shift from trusting who did the work to verifying what the work achieves and how.

This is where software has an advantage over many domains. In software, we can build verification into the pipeline. We can turn intent into executable checks.

Not perfectly. Not for everything. But far more than most organisations currently do.

Pragmatically, this means investing in three things:

1. Make intent testable

When requirements are ambiguous, don’t ask AI to “understand better.” Encode intent:

- Examples and acceptance criteria that can become tests

- Contract tests between services

- Golden path scenarios that reflect what matters

- Non-functional intent: performance budgets, reliability expectations, security constraints

This is not “more process.” It’s creating steering inputs that scale, whether the worker is a human or an agent.
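As a minimal sketch of what “encoded intent” can look like, the example below turns a handful of acceptance examples and one performance budget into checks a pipeline could run. The business rule, the `apply_discount` function, the example values, and the one-second budget are all hypothetical and exist only to illustrate the shape.

```python
import time

# Hypothetical business rule, for illustration only: orders of 100.00 or more
# (10,000 cents) get 10% off; smaller orders are unchanged.
def apply_discount(total_cents: int) -> int:
    if total_cents >= 10_000:
        return total_cents - total_cents // 10
    return total_cents

# Functional intent, written as examples: (input, expected output).
ACCEPTANCE_EXAMPLES = [
    (5_000, 5_000),    # well below the threshold: no discount
    (9_999, 9_999),    # just below the threshold: no discount
    (10_000, 9_000),   # at the threshold: 10% off
    (20_000, 18_000),  # above the threshold: 10% off
]

# Non-functional intent: an assumed time budget for 10,000 calls.
BUDGET_SECONDS = 1.0

def check_acceptance() -> list[str]:
    """Return a description of every example the implementation fails."""
    failures = []
    for total, expected in ACCEPTANCE_EXAMPLES:
        actual = apply_discount(total)
        if actual != expected:
            failures.append(
                f"apply_discount({total}) = {actual}, expected {expected}"
            )
    return failures

def check_performance_budget() -> bool:
    """Return True if 10,000 calls finish within the assumed budget."""
    start = time.perf_counter()
    for _ in range(10_000):
        apply_discount(12_345)
    return time.perf_counter() - start <= BUDGET_SECONDS
```

The point of the design is that the examples and the budget live as data next to the code. A human or an agent can change the implementation freely, and the steering input stays the same.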

2. Protect the confidence mechanisms

There is a real failure mode with agentic AI tooling: an agent can make tests pass by weakening the tests.

If you do nothing, you can end up with a pipeline that produces false confidence. That’s worse than not adopting AI at all.

The practical controls are:

- Define “protected” test suites (golden paths, contract tests, release confidence checks).

- Require stricter approvals for changes to those suites.

- Add a two-key rule: any PR that changes tests requires a second reviewer who is not the requester.

- Where needed, add external smoke checks or synthetic monitoring that the agent cannot modify.

You don’t need perfection. You need a small set of checks that stay honest.
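One way to sketch these controls is a small merge check that runs in CI. It is an interpretation, not a prescribed implementation: the directory patterns, the `may_merge` function, and the choice of “two independent approvals for protected suites, one for everything else” are assumptions made for the example.

```python
from fnmatch import fnmatchcase

# Hypothetical repository layout; adjust the patterns to your own suites.
PROTECTED_PATTERNS = ("tests/golden/*", "tests/contract/*", "tests/release/*")

def protected_files(changed_files):
    """Return the changed files that belong to a protected suite."""
    return sorted(
        path for path in changed_files
        if any(fnmatchcase(path, pattern) for pattern in PROTECTED_PATTERNS)
    )

def may_merge(changed_files, requester, approvers):
    """Apply the two-key rule to a proposed change.

    Approvals from the requester never count. Changes to protected suites
    need two independent approvals; everything else needs one.
    """
    independent = set(approvers) - {requester}
    required = 2 if protected_files(changed_files) else 1
    return len(independent) >= required
```

For example, `may_merge(["tests/golden/test_checkout.py"], "agent-bot", ["alice"])` returns `False`, because a change to a protected suite with a single independent approval does not satisfy the rule. The check itself should live somewhere the agent cannot edit, for the same reason the external smoke checks do.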

3. Put autonomy where verification is strong

Where the work is mechanical and verification is strong, autonomy is safe.

Where intent is fuzzy and verification is weak, autonomy is dangerous.

This isn’t ideology. It’s a deployment strategy.

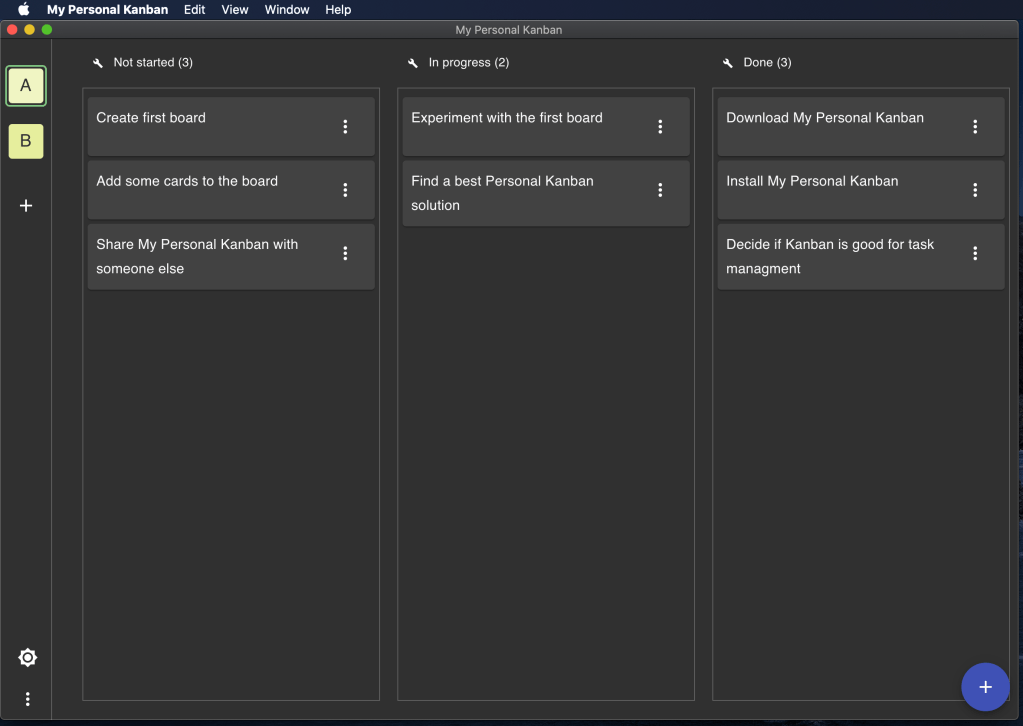

What this looks like in practice: “permission lanes” for AI

Large enterprises get stuck when AI is treated as a single decision: “Are we doing AI or not?”

A better model is permission lanes: how much autonomy do we grant, where, and under what controls?

A pragmatic set of lanes looks like this:

- Lane 0 (Assistive): AI suggests, summarises, drafts. Humans do the work and make the decisions.

- Lane 1 (PR Agents): AI can create branches and open PRs. Humans approve. AI never merges.

- Lane 2 (Pipeline Assist): AI can triage CI failures, assemble release notes, recommend. It cannot bypass gates or deploy.

- Lane 3 (Ops Assist): AI can gather signals, draft runbooks, propose mitigations. No production write actions without explicit human confirmation.

Most risk-averse organisations can stop at Lane 1 for a long time and still get real value, because Lane 1 is where toil lives and blast radius stays small.
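The lanes become enforceable once they are written down as a default-deny policy. The sketch below is one possible encoding. The action names are hypothetical labels for the activities listed above, and it assumes each lane lists only its own actions, since whether lanes are cumulative is a choice each organisation has to make.

```python
from enum import IntEnum

class Lane(IntEnum):
    ASSISTIVE = 0
    PR_AGENT = 1
    PIPELINE_ASSIST = 2
    OPS_ASSIST = 3

# Hypothetical action names mapped from the lane descriptions.
ALLOWED_ACTIONS = {
    Lane.ASSISTIVE: {"suggest", "summarise", "draft"},
    Lane.PR_AGENT: {"create_branch", "open_pr"},
    Lane.PIPELINE_ASSIST: {"triage_ci_failure", "assemble_release_notes", "recommend"},
    Lane.OPS_ASSIST: {"gather_signals", "draft_runbook", "propose_mitigation"},
}

# Actions the AI never performs on its own, whatever the lane.
ALWAYS_DENIED = {"merge", "bypass_gate", "deploy"}

def is_allowed(lane: Lane, action: str, human_confirmed: bool = False) -> bool:
    """Default-deny check: anything not explicitly listed is refused."""
    if action == "production_write":
        # Only the ops lane may write to production, and only after an
        # explicit human confirmation.
        return lane == Lane.OPS_ASSIST and human_confirmed
    if action in ALWAYS_DENIED:
        return False
    return action in ALLOWED_ACTIONS[lane]
```

With this in place, `is_allowed(Lane.PR_AGENT, "open_pr")` is `True` while `is_allowed(Lane.PR_AGENT, "merge")` is `False`, and an unknown action is refused in every lane.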

Start with the least controversial win: dependency and security upgrade PRs

If you want a first “agentic” use case that makes sense to engineering leaders, it’s this:

A PR-level agent that creates dependency and security upgrade pull requests, with evidence attached.

It is least controversial because it automates effort, not decision-making.

Why it works:

- It’s repetitive, high-volume work that teams delay.

- It directly reduces known security exposure.

- It produces measurable outcomes (time-to-remediate, backlog health).

- It fits neatly inside existing controls: PRs, reviews, gates, audit logs.

The rule is simple: the agent can work overnight, but it cannot ship anything. It can only propose changes that pass the same gates humans must pass.
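A rough sketch of “propose with evidence, never ship” is shown below. The `UpgradeProposal` type, the gate names, and the package and advisory values are placeholders invented for the example. Note what is missing on purpose: there is no merge or deploy function anywhere in the agent's code.

```python
from dataclasses import dataclass, field

# Hypothetical names for the gates a human-authored change must also pass.
REQUIRED_GATES = ("unit_tests", "contract_tests", "dependency_scan")

@dataclass
class UpgradeProposal:
    package: str
    from_version: str
    to_version: str
    advisories: tuple[str, ...] = ()                 # evidence: why upgrade
    gate_results: dict[str, bool] = field(default_factory=dict)

def ready_for_human_review(proposal: UpgradeProposal) -> bool:
    """True only if every required gate ran and passed."""
    return all(proposal.gate_results.get(gate) is True for gate in REQUIRED_GATES)

def review_summary(proposal: UpgradeProposal) -> str:
    """Build the evidence text a reviewer would see on the pull request."""
    lines = [
        f"Upgrade {proposal.package} "
        f"{proposal.from_version} -> {proposal.to_version}"
    ]
    for advisory in proposal.advisories:
        lines.append(f"Addresses: {advisory}")
    for gate in REQUIRED_GATES:
        status = "passed" if proposal.gate_results.get(gate) is True else "not passed"
        lines.append(f"Gate {gate}: {status}")
    return "\n".join(lines)
```

A proposal with a missing or failed gate is simply not surfaced for review, so the reviewer's attention goes only to changes that already cleared the same bar a human change would.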

The real AI enabler is not the agent, it’s the factory

You can’t bolt AI onto a chaotic SDLC and expect good outcomes.

AI surfaces weaknesses that were already there:

- inconsistent quality gates

- weak tests

- unclear ownership

- fragmented CI/CD tooling

- missing standards and docs

If you want safe AI adoption, your early investment is not “pick the best model.” It’s:

- define a minimal baseline for quality

- standardise the feedback loop

- codify engineering intent into artefacts the pipeline can enforce

Then AI becomes a multiplier for an already controlled system, not a wildcard thrown into production.

If you were hoping for a magic tool or model, there isn’t one. Fix the factory first.